Section 1.1 What is Data?

The word "data" comes from the Latin word "datum," meaning a "thing given." Although the term "data" has been used since as early as the 1500s, modern usage started in the 1940s and 1950s as practical electronic computers began to input, process, and output data. This chapter discusses the nature of data and introduces key concepts for newcomers without computer science experience.

The inventor of the World Wide Web, Tim Berners-Lee, is often quoted as having said, "Data is not information, information is not knowledge, knowledge is not understanding, understanding is not wisdom." This quote suggests a kind of pyramid, where data are the raw materials that make up the foundation at the bottom of the pile, and information, knowledge, understanding, and wisdom represent higher and higher levels of the pyramid. In one sense, the major goal of a data scientist is to help people turn data into information and onwards up the pyramid. Before getting started on this goal, though, it is important to have a solid sense of what data actually are. (Notice that this book treats the word "data" as a plural noun; in common usage you may often hear it treated as singular instead.) Read on for an introduction to the most basic ingredient of the data scientist’s efforts: data.

A substantial amount of what we know and say about data in the present day comes from work by a U.S. mathematician named Claude Shannon. Shannon worked before, during, and after World War II on a variety of mathematical and engineering problems related to data and information. Not to go crazy with quotes, or anything, but Shannon is quoted as having said, "The fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected at another point." In other words, communication succeeds when the recipient ends up with the message that the source selected, or with a close enough copy of it. This quote helpfully captures key ideas about data that are important in this book by focusing on the idea of data as a message that moves from a source to a recipient.

Think about the simplest possible message that you could send to another person over the phone, via a text message, by video conference, or even in person. Let’s say that a family member had asked you a question, for example whether you wanted to eat fish for dinner that night. You can answer yes or no. You can call your family member on the phone and say yes or no. You might have a bad connection, though, and your family member might not be able to hear you. Likewise, you could send them a text message with your answer, yes or no, and hope that they have their phone turned on so that they can receive the message. Or you could tell that family member face to face, hoping that they did not have their earbuds turned up so loud that they couldn’t hear you. In all three cases you have a one-"bit" message that you want to send to your family member, yes or no, with the goal of "reducing their uncertainty" about whether you want fish for dinner. Assuming that message gets through without being garbled or lost, you will have successfully transmitted one bit of information from you to them.

Claude Shannon developed some mathematics, now often referred to as "Information Theory," that carefully quantified how bits of data transmitted accurately from a source to a recipient can reduce uncertainty by providing information. A great deal of the computer networking equipment and software in the world today, and especially the huge linked worldwide network we call the Internet, is primarily concerned with this one basic task of getting bits of information from a source to a destination.

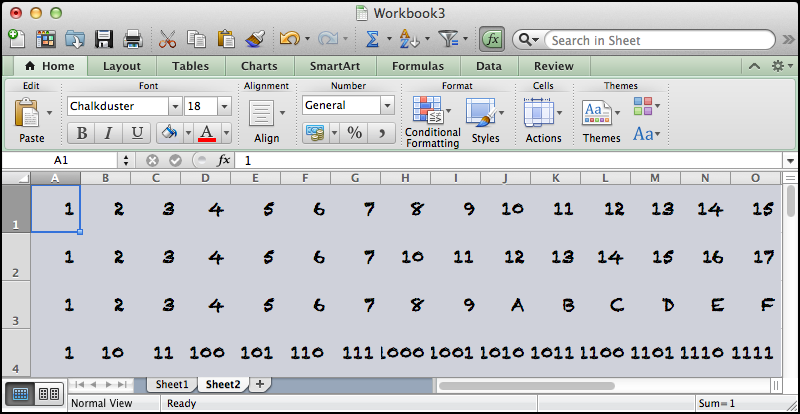

Once we are comfortable with the idea of a "bit" as the most basic unit of information, either "yes" or "no," we can combine bits to make more complicated structures. First, let’s switch labels just slightly. Instead of "no" we will start using zero, and instead of "yes" we will start using one. So we now have a single digit, albeit one that has only two possible states: zero or one (we’re temporarily making a rule against allowing any of the bigger digits like three or seven). This is in fact the origin of the word "bit," which is a squashed down version of the phrase "Binary digIT." A single binary digit can be 0 or 1, which gives us just two options, like “yes” or “no,” but there is nothing stopping us from using more than one binary digit in our messages. Have a look at the example in the table below:

| MEANING | 2ND DIGIT | 1ST DIGIT |
|---|---|---|
| No | 0 | 0 |
| Maybe | 0 | 1 |
| Probably | 1 | 0 |
| Definitely | 1 | 1 |

Here we have started to use two binary digits (two bits) to create a "code book" for four different messages that we might want to transmit to our friend about her dinner party. If we were certain that we would not attend, we would send her the message 0 0. If we definitely planned to attend, we would send her 1 1. But we have two additional possibilities: "Maybe," which is represented by 0 1, and "Probably," which is represented by 1 0. It is interesting to compare our original yes/no message of one bit with this new four-option message with two bits. In fact, every time you add a new bit you double the number of possible messages you can send. So three bits would give eight options and four bits would give 16 options. How many options would there be for five bits?
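The doubling rule can be tried out in a few lines of Python. This is only a minimal sketch: the function names `count_messages` and `all_patterns` and the `code_book` dictionary are made up for this illustration. It rebuilds the two-bit code book from the table above and counts the options for one through four bits.

```python
from itertools import product


def count_messages(num_bits):
    """Number of distinct messages that num_bits binary digits can encode."""
    return 2 ** num_bits


def all_patterns(num_bits):
    """List every bit pattern of the given length, in counting order."""
    return ["".join(bits) for bits in product("01", repeat=num_bits)]


# The two-bit code book from the table: each pattern is written
# with the 2nd digit first and the 1st digit last.
meanings = ["No", "Maybe", "Probably", "Definitely"]
code_book = dict(zip(all_patterns(2), meanings))

for pattern, meaning in code_book.items():
    print(pattern, meaning)

# Each extra bit doubles the number of possible messages.
for n in (1, 2, 3, 4):
    print(n, "bit(s):", count_messages(n), "options")
```

Calling `count_messages(5)` is one way to check your answer to the five-bit question.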

When we get up to eight bits, which provide 256 different combinations, we finally have something of a reasonably useful size to work with. Eight bits are commonly referred to as a "byte"; this term probably started out as a play on words with the word bit. (Try looking up the word "nibble" online!) A byte offers enough different combinations to encode all of the letters of the alphabet, including capital and small letters.

There is an old rulebook called "ASCII," the American Standard Code for Information Interchange, which matches up bit patterns with the letters of the alphabet, punctuation, and a few other odds and ends. (Strictly speaking, ASCII defines only 128 codes, which fit in seven bits; when a code is stored in a byte, the eighth, leftmost bit is zero.) For example, the bit pattern 0100 0001 represents the capital letter A and the next higher pattern, 0100 0010, represents capital B. Try looking up an ASCII table online (for example, http://www.asciitable.com/) and you can find all of the combinations. Note that the codes may not actually be shown in binary because it is so difficult for people to read long strings of ones and zeroes. Instead you may see the equivalent codes shown in hexadecimal (base 16), octal (base 8), or the most familiar form that we all use every day, decimal (base 10). You might remember base conversions from high school math class, and some people find it fun to practice converting between binary, hexadecimal, and decimal. You might also enjoy Vi Hart’s Binary Hand Dance video in which she and her family count in binary on their fingers.
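If you have Python available, you can look up these codes yourself instead of reading them from a table. The sketch below uses only Python's built-in `ord` and `format` functions; the helper name `to_bits` is made up for this illustration. It shows each letter's code in decimal, hexadecimal, octal, and as an eight-bit pattern.

```python
def to_bits(character):
    """Return the eight-bit pattern for a single ASCII character."""
    code = ord(character)        # the character's numeric code, e.g. 65 for "A"
    return format(code, "08b")   # binary text, padded with zeros to eight digits


for ch in "AB":
    code = ord(ch)
    # decimal, hexadecimal, octal, and binary forms of the same code
    print(ch, code, format(code, "x"), format(code, "o"), to_bits(ch))
```

The binary column should match the patterns quoted above for capital A and capital B, just without the space in the middle.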

Most of the work we do in this book will be in decimal, but more complex work with data often requires understanding hexadecimal and knowing how a hexadecimal number, like 0xA3, translates into a bit pattern. We don’t need that right now, though. If you are interested, you might try searching online for "binary conversion tutorial" and you will find lots of useful sites.
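For the curious, here is a minimal sketch of that translation in Python. The function name `hex_to_bits` is made up for this illustration. It relies on the fact that each hexadecimal digit stands for exactly four bits, so the digits can be converted one at a time.

```python
def hex_to_bits(hex_text):
    """Translate a hexadecimal number such as "0xA3" into its bit pattern."""
    # Drop the optional "0x" prefix that marks a number as hexadecimal.
    digits = hex_text[2:] if hex_text.lower().startswith("0x") else hex_text
    # Convert each hexadecimal digit into its own group of four bits.
    return " ".join(format(int(digit, 16), "04b") for digit in digits)


print(hex_to_bits("0xA3"))   # A becomes 1010 and 3 becomes 0011
print(int("0xA3", 16))       # the same number in decimal
```

Working digit by digit is a design choice made here for readability: it keeps the four-bit groups visible, which is why hexadecimal is such a convenient shorthand for binary.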
